I've loved the product! It's given me the peace of mind that I wouldn't have gotten without having to spin up my own remote server. It's brilliant! The service works so well that I often forget I'm even using it.

Mark Evans

CEO App Launchers, LLC

Using AIProxySwift has been a game-changer for me. It’s incredibly easy to use and install, and since I started using it, I’ve had no more suspicious activity on my OpenAI account. Highly recommend for anyone looking to optimize their AI solutions with a real support.

Maxime Maheo

Apptico - Chatbot: AI Chat + Assistant

Security, observability and control

Security

AIProxy uses a combination of split key encryption, DeviceCheck and certificate pinning to prevent your key and endpoint from being stolen or abused.

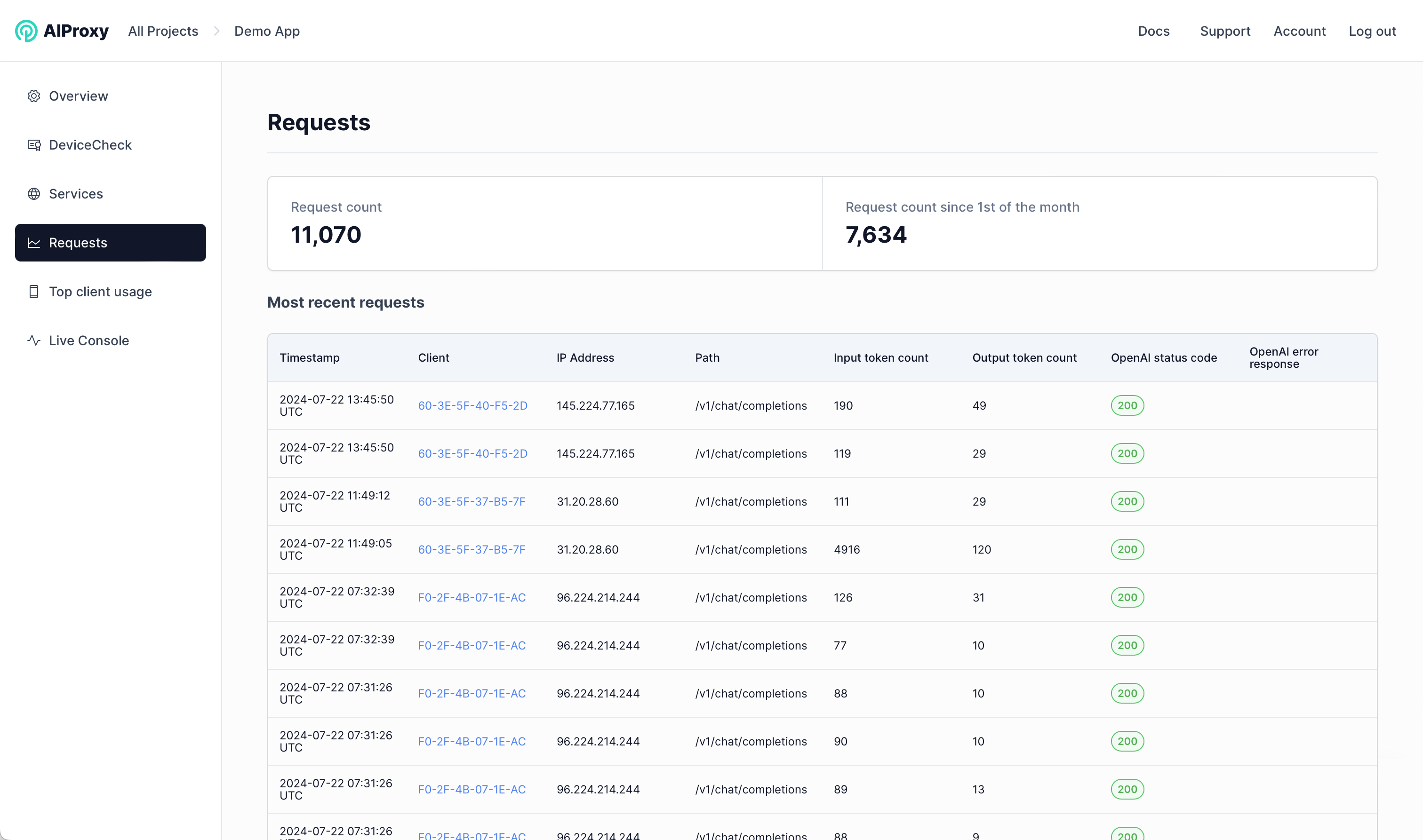

Monitoring

Our dashboard helps you keep an eye on your usage and get a deeper understanding of how users are interacting with AI in your app.

Rate Limits & Model Overrides

Want to change your API calls from gpt-3.5-turbo to gpt-4o? No problem! You can change models and rate limits right from the dashboard without updating your app.

Live Console

Use the live console to test your API calls from your app. Find errors and get a better understanding of performance.

Notifications

Get alerts when there's suspicious activity so you can take quick action. We'll keep you informed so that you can stay protected.

Built to Scale

Built on AWS, our service horizontally scales to meet demands. You can be confident that your proxy will continue to run no matter what.

What customers say...

Maxime Maheo

Chatbot: AI + Assistant

Mark Evans

CEO App Launchers

Shihab Mehboob

Bulletin

Juanjo Valiño

Wrapfast

Hidde van der Ploeg

Helm

James Rochabrun

SwiftOpenAI

Tirupati Balan

Amigo Finance

Sam McGarry

Cue

Luca Lupo

iirc_ai

Emin Grbo

@r0black

Mario

PlantIdentify

Arjun

@dotarjun

Resources to get you started

One swift lib to connect to any AI API.

View on GitHub

Sample apps to help you get started.

View Resources

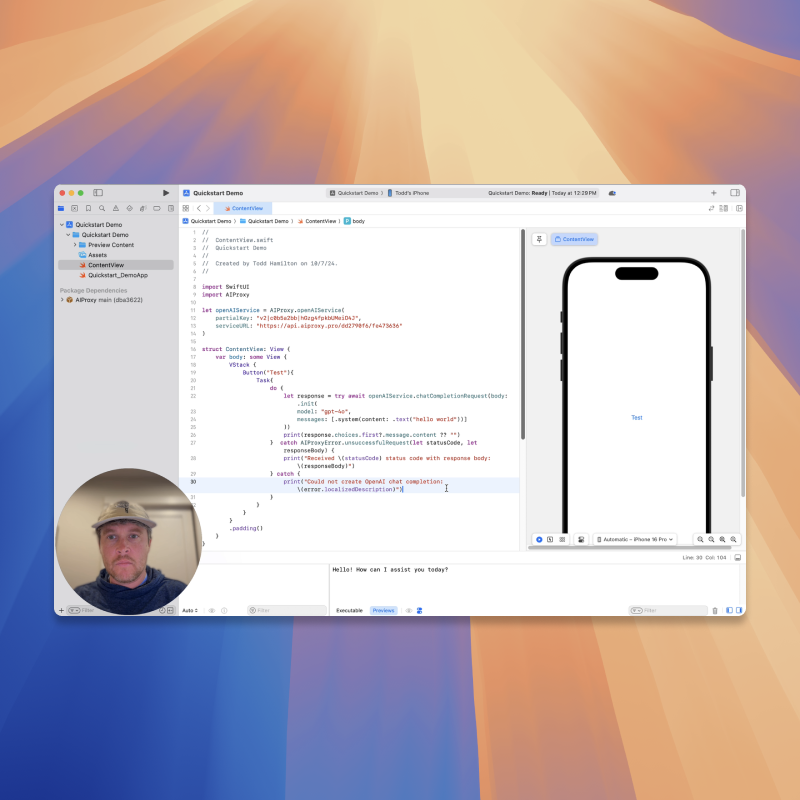

Learn how to integrate in just a few minutes.

View Video

Protect the APIs your app depends on

Integrate with your app in minutes.

FAQs

Have more questions?

No, we don't actually store any customer API keys. Instead, we encrypt your key and store one part of that encrypted result in our database. On its own, this message can't be reversed into your secret key. The other part of the encrypted message is sent up with requests from your app. When the two pieces are married, we derive your secret key and fulfill the request.

The key we provide you is useless on its own and can be hardcoded in your client. When you add an OpenAI key in our dashboard we don't store it on our backend. We encrypt your key and store only half, and give you the other half which you use in your client. We combine these two pieces and decrypt when a request gets made.
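To make the "two halves" idea concrete, here is a minimal sketch in Python. It is not AIProxy's actual scheme, which this page does not spell out; it swaps in plain XOR secret splitting as a stand-in that has the same property: either piece alone is random noise, and the secret only reappears when both are combined. The function names and the example key are hypothetical.

```python
import secrets


def split_secret(secret: bytes) -> tuple[bytes, bytes]:
    """Split a secret into two shares using XOR (illustrative stand-in only).

    The first share is pure random bytes; the second is the secret XORed
    with those bytes. Neither share reveals the secret on its own.
    """
    server_share = secrets.token_bytes(len(secret))
    client_share = bytes(s ^ r for s, r in zip(secret, server_share))
    return server_share, client_share


def combine_shares(server_share: bytes, client_share: bytes) -> bytes:
    """Recover the secret by XORing the two shares back together."""
    if len(server_share) != len(client_share):
        raise ValueError("shares must be the same length")
    return bytes(a ^ b for a, b in zip(server_share, client_share))


# Hypothetical placeholder, not a real API key.
api_key = b"sk-example-not-a-real-key"

# One share would stay in the backend database, the other would ship in the app.
server_share, client_share = split_secret(api_key)

# Only when a request brings the client share to the server can the key be rebuilt.
recovered = combine_shares(server_share, client_share)
```

In this sketch the client share can be hardcoded in an app because it carries no information about the key without the matching server share.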

We have multiple mechanisms in place to restrict endpoint abuse:

1. Your AIProxy project comes with proxy rules that you configure. You can enable only the endpoints that your app depends on in the proxy rules section. For example, if your app depends on /v1/chat/completions, then you would permit the proxying of requests to that endpoint and block all others. This makes your endpoint less desirable to attackers.

2. We use Apple's DeviceCheck service to ensure that requests to AIProxy originated from your app running on legitimate Apple hardware.

3. We guarantee that DeviceCheck tokens are only used once, which prevents an attacker from replaying a token that they sniffed from the network.
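The first and third mechanisms can be sketched roughly as follows, in Python. This is an illustration under assumptions, not AIProxy's implementation: `ALLOWED_ENDPOINTS`, `ReplayGuard`, and `should_proxy` are hypothetical names, the token is treated as an opaque string, and validating the token with Apple (the second mechanism) is left out entirely.

```python
# Hypothetical proxy rule: only the endpoint the app depends on is permitted.
ALLOWED_ENDPOINTS = {"/v1/chat/completions"}


class ReplayGuard:
    """Remembers tokens that were already used so each is accepted only once."""

    def __init__(self) -> None:
        self._seen: set[str] = set()

    def consume(self, token: str) -> bool:
        """Return True the first time a token is presented, False afterwards."""
        if token in self._seen:
            return False
        self._seen.add(token)
        return True


def should_proxy(path: str, device_token: str, guard: ReplayGuard) -> bool:
    """Decide whether a request may be forwarded upstream.

    Assumption: a real service would also verify the token with Apple's
    DeviceCheck servers before accepting it; that step is omitted here.
    """
    if path not in ALLOWED_ENDPOINTS:
        return False  # endpoint is blocked by the proxy rules
    return guard.consume(device_token)  # reject replayed tokens


guard = ReplayGuard()
first = should_proxy("/v1/chat/completions", "token-abc", guard)    # fresh token
replay = should_proxy("/v1/chat/completions", "token-abc", guard)   # same token again
blocked = should_proxy("/v1/images/generations", "token-xyz", guard)  # not in the rules
```

An in-memory set only works within a single process; a horizontally scaled service would presumably need a shared store for used tokens.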

The proxy is deployed on AWS Lambda, meaning we can effortlessly scale horizontally behind a load balancer.

Upon configuring your project in the developer dashboard, you'll receive initialization code to drop into the AIProxySwift client.